Managing Sidekiq Workers And Processes

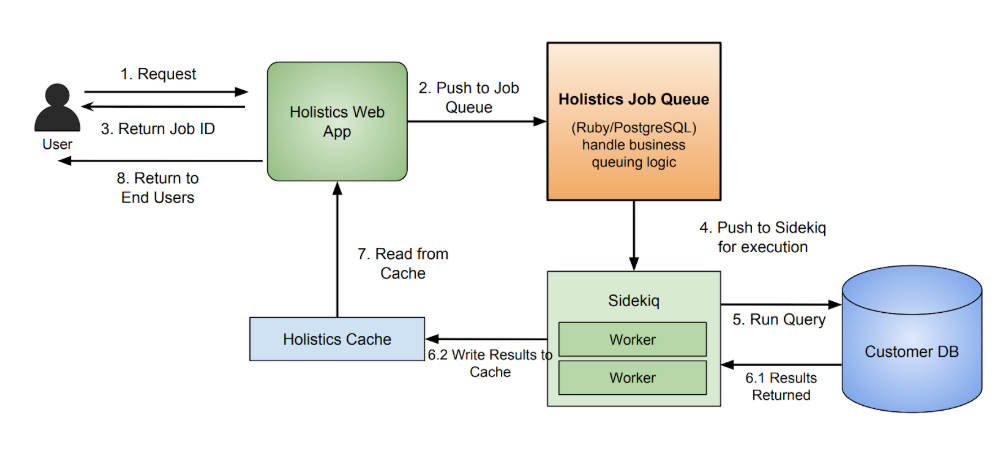

At Holistics, we use Sidekiq, the de facto standard background processing framework for Ruby. By combining the reliability features of Sidekiq Pro with our own retry logic, we can be sure that no back-end job gets lost or forgotten. Yet for a while, we had process management issues that we could not solve effectively.

Gracefully restarting worker processes

The recommended way to restart a Sidekiq worker is to send the worker process SIGTERM (the signal that asks a process to terminate), with a pre-configured shutdown timeout, and then spawn a new process. This works well if all jobs are short-lived and can complete within the timeout. However, not all jobs fit this pattern. For example, we use Sidekiq background workers to send complex queries to customers' databases, and the running time of most of these queries cannot be predicted beforehand. If a worker hasn't finished its job by the end of the timeout, it is forced to shut down. Thanks to Sidekiq Pro's reliability feature, the job is not lost and is restarted automatically, but the customer's database now has two identical queries running, which wastes resources.

Luckily, Sidekiq provides another building block for gracefully restarting processes: the USR1 signal. When a Sidekiq process receives this signal, it stops accepting new jobs but continues working on its current ones. A Sidekiq process in this state is called 'quiet'. By waiting until each quiet process has finished its jobs, we can then safely shut it down with SIGTERM, without interrupting unfinished jobs or, for example, running the same complex query twice.
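A minimal Ruby sketch of this quiet-then-terminate sequence for a single process follows. The method `quiet_then_stop` and its parameters are hypothetical, not part of Sidekiq: `busy_count` stands in for whatever reports how many jobs the process is still running, and `kill`/`sleeper` are injectable so the logic can be exercised without real processes. It assumes the USR1 quiet signal described here; Sidekiq 5.0 and later document TSTP for the same purpose, so the signal is a parameter.

```ruby
# Hypothetical helper (not part of Sidekiq): quiet one worker process, wait
# until it reports no busy jobs, then ask it to shut down with TERM.
#
# busy_count    - callable(pid) returning how many jobs the process still runs
#                 (e.g. parsed from `ps` output or read via the Sidekiq API).
# quiet_signal  - "USR1" as described above; Sidekiq 5.0+ documents "TSTP".
# kill, sleeper - injectable so the logic can be tested without real processes.
def quiet_then_stop(pid, busy_count:, quiet_signal: "USR1", poll_interval: 5,
                    kill: ->(sig, target) { Process.kill(sig, target) },
                    sleeper: ->(seconds) { sleep(seconds) })
  kill.call(quiet_signal, pid)      # stop fetching new jobs; current ones keep running

  # Blocks until the process is idle; nothing is interrupted while jobs remain.
  sleeper.call(poll_interval) until busy_count.call(pid).zero?

  kill.call("TERM", pid)            # safe now: there is no unfinished job to kill
  true
end
```

Because the loop blocks until the process is idle, a real manager would more likely run this check on a schedule across all processes, as the periodic algorithm in the Operations section does.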

Now the question is: how do we know when to send SIGTERM? By looking at the process name in the output of the 'ps' command, or by querying its status via the Sidekiq API, we can see how many jobs a specific worker process is still executing. Since we can't know in advance when that number will reach zero, we need to check these statuses periodically. This is why we need a Sidekiq process manager script.
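The sketch below reads that status from the process titles listed by `ps -eo pid=,args=`. It assumes the proctitle format used by Sidekiq 4.x/5.x, for example `sidekiq 4.2.10 myapp [3 of 25 busy]`, with `stopping` appended once the process is quiet; other versions may format the title differently, so treat the regular expression as an assumption to verify. `ProcessStatus`, `parse_ps_line`, and `sidekiq_statuses` are hypothetical names.

```ruby
# Assumed Sidekiq 4.x/5.x proctitle, e.g.
#   "sidekiq 4.2.10 myapp [3 of 25 busy]"           (running)
#   "sidekiq 4.2.10 myapp [0 of 25 busy] stopping"  (quiet)
SIDEKIQ_TITLE = /\A\s*(?<pid>\d+)\s+sidekiq\s+\S+.*\[(?<busy>\d+) of (?<concurrency>\d+) busy\](?<stopping>\s+stopping)?/

ProcessStatus = Struct.new(:pid, :busy, :concurrency, :quiet)

# Returns a ProcessStatus for a Sidekiq line of `ps -eo pid=,args=`, else nil.
def parse_ps_line(line)
  m = SIDEKIQ_TITLE.match(line)
  return nil unless m

  ProcessStatus.new(m[:pid].to_i, m[:busy].to_i, m[:concurrency].to_i, !m[:stopping].nil?)
end

# Runs `ps` by default; pass a string instead to parse captured output.
def sidekiq_statuses(ps_output = `ps -eo pid=,args=`)
  ps_output.each_line.map { |line| parse_ps_line(line) }.compact
end
```

A quiet process whose `busy` count is 0 is exactly the case where sending SIGTERM can no longer interrupt a job.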

The manager script's requirements

The job of this Sidekiq manager script is to:

- Periodically check the status of the Sidekiq processes. If a process is in the quiet state and has no running jobs, terminate it with the SIGTERM signal.

- After receiving a "graceful restart" command from the administrator, switch all currently active processes to 'quiet' status and spawn new processes to handle new jobs. The number of newly spawned processes should be configurable, to ensure that there are enough workers to handle the jobs.

Our requirements are now pretty clear:

- Condition 1: Worker processes are never interrupted by SIGTERM/SIGKILL while they still have jobs running.

- Condition 2: There are at least X running worker processes at any time to handle the workload. X is configurable.

- Allow for a "graceful restart" of the processes during deployment, meaning conditions 1 and 2 are both guaranteed to hold, while the new code change takes effect as soon as possible.

In the case of Holistics, there is one final requirement.

Avoiding the dreadful OOM killer

One of the issues we encountered was long-running worker processes that did not release memory, so their memory footprint grew until they were killed by the OS's out-of-memory (OOM) killer. Thus, our last requirement is:

- Once a worker process reaches a pre-determined memory threshold, it should stop accepting new jobs (which would allocate more memory) and become quiet.
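On Linux, one way to measure this is to read the process's resident set size (the `VmRSS` line of `/proc/<pid>/status`, reported in kB). This is a minimal sketch that assumes Linux and a threshold expressed in kilobytes; the post does not say how memory is measured, and `rss_kb` and `over_memory_threshold?` are hypothetical helpers. The status text can be passed in so the logic is testable.

```ruby
# Hypothetical helper: resident set size of a process in kB, read from the
# VmRSS line of /proc/<pid>/status (Linux only). Returns nil if absent.
def rss_kb(pid, status_text = File.read("/proc/#{pid}/status"))
  line = status_text.each_line.find { |l| l.start_with?("VmRSS:") }
  line ? line[/\d+/].to_i : nil
end

# True when the process has reached the threshold and should be quieted,
# so it stops pulling in new, memory-allocating jobs.
def over_memory_threshold?(pid, threshold_kb, status_text = File.read("/proc/#{pid}/status"))
  rss = rss_kb(pid, status_text)
  !rss.nil? && rss >= threshold_kb
end
```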

Designing the management script

Input/output:

The process manager accepts the following configuration options:

- ACTIVE_PROCESS_COUNT: Guaranteed number of active processes. Active here means the worker processes are neither stopped nor quiet, and can accept new jobs.

- MEMORY_THRESHOLD: The memory usage at which a process goes quiet and stops accepting new jobs.

The process manager also accepts the following command:

- restart: Triggers a graceful restart of the worker processes, typically used during deployment or a hotfix.
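A minimal sketch of how these options might be loaded, assuming they are passed as environment variables with the same names and that MEMORY_THRESHOLD is given in kilobytes. `ManagerConfig`, `load_config`, and the default values are hypothetical placeholders, not recommendations.

```ruby
# Hypothetical configuration record for the process manager.
ManagerConfig = Struct.new(:active_process_count, :memory_threshold_kb)

# Reads the options from an ENV-like hash. Integer() raises ArgumentError on
# malformed values, so a typo fails loudly instead of silently becoming 0.
def load_config(env = ENV)
  count = Integer(env.fetch("ACTIVE_PROCESS_COUNT", "2"))          # placeholder default
  threshold_kb = Integer(env.fetch("MEMORY_THRESHOLD", "1048576"))  # placeholder: 1 GiB in kB
  raise ArgumentError, "ACTIVE_PROCESS_COUNT must be positive" unless count.positive?

  ManagerConfig.new(count, threshold_kb)
end
```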

Operations:

Every few minutes (say, every 5 minutes), the manager runs the following algorithm:

- Collect process data from the Sidekiq API, the 'ps' command, or the process manager (Upstart/Supervisor/systemd).

- Each process is in one of three states: running, stopped, or quiet.

- Go through all quiet processes and stop those with zero running jobs.

- Go through all running processes that exceed the memory threshold and quiet them.

- If the number of running processes < ACTIVE_PROCESS_COUNT, start stopped processes until the number of running processes >= ACTIVE_PROCESS_COUNT.
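The periodic pass above can be sketched as a function that takes a snapshot of the processes and returns the actions to perform. This is a minimal sketch under stated assumptions: it assumes a fixed pool of process slots managed by Upstart/Supervisor/systemd (so "starting" means starting a stopped slot), and `WorkerSlot`, `tick`, and the action tuples are hypothetical. Actually executing the actions (sending signals or calling the process manager) is left out.

```ruby
# Hypothetical snapshot of one process slot; state is :running, :stopped or :quiet.
WorkerSlot = Struct.new(:name, :state, :busy, :rss_kb)

# One pass of the periodic check. Updates each slot's state to the planned
# value and returns the actions a real manager would then execute, in order.
def tick(slots, active_process_count:, memory_threshold_kb:)
  actions = []

  # 1. Quiet processes with no jobs left can be stopped safely (Condition 1).
  slots.select { |s| s.state == :quiet && s.busy.zero? }.each do |s|
    actions << [:stop, s.name]
    s.state = :stopped
  end

  # 2. Running processes over the memory threshold stop accepting new jobs.
  slots.select { |s| s.state == :running && s.rss_kb >= memory_threshold_kb }.each do |s|
    actions << [:quiet, s.name]
    s.state = :quiet
  end

  # 3. Start stopped slots until enough processes accept jobs (Condition 2).
  missing = active_process_count - slots.count { |s| s.state == :running }
  slots.select { |s| s.state == :stopped }.first([missing, 0].max).each do |s|
    actions << [:start, s.name]
    s.state = :running
  end

  actions
end
```

Keeping the decisions separate from the side effects makes each rule easy to test on its own, for example checking that a quiet process with jobs still running is never stopped.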

Every time the restart command is triggered:

- Start ACTIVE_PROCESS_COUNT new processes to handle new jobs.

- Quiet all previously running processes.
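A minimal sketch of the restart command: `ManagedWorker`, `graceful_restart`, and the `spawn` callable (standing in for whatever starts a fresh process running the new code) are hypothetical. The new processes are started before the old ones are quieted, so Condition 2 holds throughout; the quieted processes are then stopped by the periodic check once they are idle.

```ruby
# Hypothetical process record; state is :running, :stopped or :quiet.
ManagedWorker = Struct.new(:name, :state, :busy)

# Graceful restart: start fresh processes first, then quiet the old ones.
# spawn - callable that starts one process with the new code and returns its
#         ManagedWorker record (hypothetical; e.g. a Supervisor/systemd call).
# Returns [all_workers, actions].
def graceful_restart(workers, active_process_count:, spawn:)
  old_running = workers.select { |w| w.state == :running }

  # Start the new processes before quieting anything, so at least
  # ACTIVE_PROCESS_COUNT processes can always accept jobs (Condition 2).
  fresh = Array.new(active_process_count) { spawn.call }

  # Old processes finish their current jobs but fetch no new ones; they are
  # stopped later, once idle (Condition 1).
  old_running.each { |w| w.state = :quiet }

  actions = fresh.map { |w| [:start, w.name] } + old_running.map { |w| [:quiet, w.name] }
  [workers + fresh, actions]
end
```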

Conclusion

With the process management script now in production, we are confident that the background system is reliable, that no jobs get re-executed, and that we can gracefully restart the workers during deployment. In other words: no more customer complaints about duplicated job executions, and we can all sleep well at night!

— — — — — — — — — — — —

Having trouble finding a simple and affordable data reporting system for your startup? Check us out at holistics.io.
